How to Properly Prioritize Technical Fixes for Site Architecture

I've been in enough technical SEO audits to know the feeling. You open up Screaming Frog or your crawler of choice, and suddenly you're staring at a long list of issues, everything from pagination problems to missing canonicals to duplicate content nightmares. Here's the thing, though: not all technical fixes are created equal. Some will move the needle on your organic visibility. Others? They're just nice-to-haves.

Before you touch a single line of code or submit your first ticket to dev, you need to be honest about the impact of each issue and the effort it takes to fix it. And remember, the foundations of SEO are still important.

Sometimes, maybe even most times, it's not about how long the fix takes. It's about dev resources and the potential risks the fix can cause. So the sweet spot? High-impact, low-effort fixes.

The Framework

First, I run an audit in both Screaming Frog and SEMRush so I can compare their results. Alongside that, I check the /robots.txt file and the website's sitemap.

Running an audit on both platforms lets me see where their findings overlap, which is good to take note of. You don't have to use these platforms; they're just my tools of choice.

I export all issues from both tools and begin by categorizing them. When choosing the severity, keep in mind that it will be different for each client and website.

I made a quick example below. Keep it very simple; do not overcomplicate it.

Once you have your list of issues, estimate the effort it will take to fix each one. Factoring in the capabilities and resources at hand puts you in a better position when it's time to take action.

Pro Tip: Add another column that links to the list of affected pages exported from your crawler.

| Issue | Alignment | Severity | Effort | Resource |
|---|---|---|---|---|
| 167 Internal Links Broken | Crawlability | High | 3 hours | Link |
| 15 Pages with Underscores in URL | Indexability | Low | 1 hour | Link |
| 5 Pages with Canonicals Missing | Indexability | High | 1 hour + dev resources | Link |
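If your issue list lives in a spreadsheet export, a few lines of Python can do the sorting for you. This is only a rough sketch of how I think about it: severity tier first, then fixes that don't need dev, then the shortest effort. The `Issue` fields, the `SEVERITY_RANK` values, and the tie-breaking order are my own assumptions, so adjust them per client.

```python
from dataclasses import dataclass

# Hypothetical ranking; adjust the tiers to match your own severity labels.
SEVERITY_RANK = {"High": 3, "Medium": 2, "Low": 1}


@dataclass
class Issue:
    name: str
    alignment: str
    severity: str          # "High", "Medium", or "Low"
    effort_hours: float    # rough estimate of hands-on time
    needs_dev: bool = False


def prioritize(issues):
    """Sort by severity tier, then no-dev fixes first, then least effort."""
    return sorted(
        issues,
        key=lambda i: (-SEVERITY_RANK[i.severity], i.needs_dev, i.effort_hours),
    )


ISSUES = [
    Issue("167 Internal Links Broken", "Crawlability", "High", 3),
    Issue("15 Pages with Underscores in URL", "Indexability", "Low", 1),
    Issue("5 Pages with Canonicals Missing", "Indexability", "High", 1, needs_dev=True),
]

for issue in prioritize(ISSUES):
    print(issue.severity, "|", issue.name)
```

Sorting by tier before effort keeps an easy low-severity fix from jumping ahead of a harder high-severity one.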

The Foundational Fixes

These are your "house is on fire" issues. If you don't fix these, nothing else matters.

Crawlability and Indexability Issues

Crawlability is the ability of search engine bots to access and navigate your website's pages. This comes first because Googlebot must be able to find and read your content before it can move on to the next step, indexability.

| How To Improve It | |
|---|---|
| Site Structure | Make sure your site structure is logical, with clear internal linking that makes it easy for bots to travel and find pages. Site structure includes headings and URL structure, and even breadcrumbs can help. |
| Robots.txt File | Ensure the robots.txt file is not blocking crawlers from your important pages. |
| Sitemaps | First clean out any pages that should not be indexed (for example, pages with a "noindex" tag), then submit the sitemap to Google Search Console. |
| Broken links (internal & external) | Avoid links on your site that point to a 404 or, less harmfully, to an old URL that redirects. |

Check your robots.txt file. I've seen too many sites accidentally blocking entire sections because someone added a disallow rule three years ago and forgot about it.
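One quick way to catch a forgotten disallow rule is to test your important URLs against the robots.txt rules. The sketch below uses Python's standard `urllib.robotparser`. The rules and URLs are made up for illustration, and this parser does not replicate every Googlebot matching rule (wildcards, for example), so treat a hit as something to verify rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt with a stale rule blocking a whole section.
ROBOTS_TXT = """\
User-agent: *
Disallow: /services/
Disallow: /cart/
"""

# Hypothetical list of pages you care about.
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/services/technical-seo",
    "https://example.com/blog/site-architecture",
]

print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
```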

Then look at your XML sitemaps. Are they actually being submitted? Are they updated? Are you including pages that shouldn't be indexed? Your sitemap should be a clean roadmap of what you want Google to see, not a messy directory dump.
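To check whether a sitemap includes pages that shouldn't be indexed, you can compare its URLs against the noindex list from your crawl. Here is a minimal sketch using the standard library. The sitemap content and the `NOINDEX_URLS` set are placeholders; in practice the set would come from your crawler export.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Extract every <loc> value from a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def noindex_in_sitemap(sitemap_xml, noindex_urls):
    """Return sitemap URLs that are also flagged noindex."""
    return [url for url in sitemap_urls(sitemap_xml) if url in noindex_urls]


# Hypothetical sitemap and crawl data.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/services</loc></url>
  <url><loc>https://example.com/thank-you</loc></url>
</urlset>"""

NOINDEX_URLS = {"https://example.com/thank-you"}

print(noindex_in_sitemap(SITEMAP_XML, NOINDEX_URLS))
```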

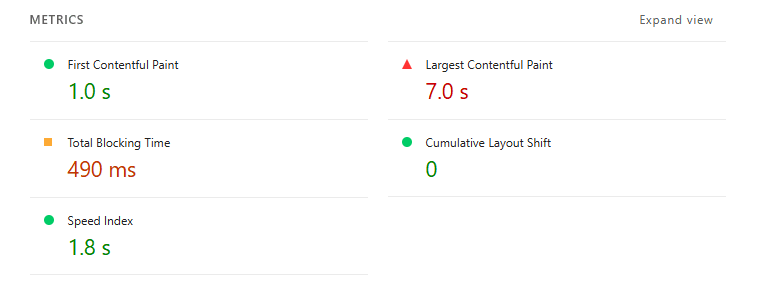

Critical Page Speed and Core Web Vitals

Google cares about Core Web Vitals, and so should you. But here's what most people get wrong… you don't need to optimize every single page to perfection on day one. Focus on your money pages first. Your top landing pages, your high-converting product pages, your main service pages should be priority. Understanding this will allow you to prioritize your fixes even better.

| How To Improve This | |
|---|---|
| Optimize Images | Use modern formats like WebP and AVIF, compress them using TinyPNG or a WordPress plugin, resize them for different devices, and implement lazy loading for images that are not immediately visible. |
| Improve Server Response Time | Upgrade your hosting, use a content delivery network (CDN), and optimize server-side code. |
| Reduce Render-Blocking Resources | Defer non-critical CSS and JavaScript files. You can also inline critical CSS to display the essential content first. |
| Preload Important Resources | Use preload hints (`<link rel="preload">`) to tell the browser to download high-priority assets, like the LCP image, earlier in the loading process. |
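To decide which money pages need attention first, it helps to rate each one against Google's published Core Web Vitals thresholds. The sketch below does that for a handful of pages. The page paths and metric values are invented; in practice you would plug in field data from PageSpeed Insights or Search Console.

```python
# Google's published thresholds:
# (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}


def rate(metric, value):
    """Classify a single metric value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def pages_to_fix(pages):
    """Return pages with at least one metric outside 'good', in input order."""
    return [
        url
        for url, metrics in pages.items()
        if any(rate(name, value) != "good" for name, value in metrics.items())
    ]


# Hypothetical money pages with made-up measurements.
MONEY_PAGES = {
    "/": {"LCP": 2.1, "INP": 150, "CLS": 0.05},
    "/services/technical-seo": {"LCP": 3.4, "INP": 180, "CLS": 0.02},
    "/products/widget": {"LCP": 2.4, "INP": 620, "CLS": 0.31},
}

print(pages_to_fix(MONEY_PAGES))
```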

Improve Your Site Architecture

Once your foundation is solid, it's time to tackle structural issues that affect how search engines understand and navigate your site.

URL Structure and Internal Linking

Your URL structure should tell both users and search engines what to expect. Shallow architecture beats deep architecture almost every time. If users need to click five levels deep to reach a product page, you have a problem.

Audit your internal linking strategy. Are you linking to important pages from high-authority pages? Are you using descriptive anchor text? Are orphan pages floating around with zero internal links pointing to them? Fix the linking structure, and you'll see how the crawl budget gets distributed more effectively across your important pages.
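Click depth and orphan pages are both easy to compute once you have an internal link export. The sketch below assumes a simple mapping of each page to the pages it links to (the `LINKS` data is made up) and uses a breadth-first search from the homepage. Pages missing from the result are unreachable by links, and pages with no inbound links at all are orphans.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search: minimum number of clicks from home to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, all_pages, home="/"):
    """Pages that no other page links to (the homepage is excluded)."""
    linked = {target for targets in links.values() for target in targets}
    return sorted(p for p in all_pages if p not in linked and p != home)


# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/services/seo"],
}
ALL_PAGES = {"/", "/services", "/services/seo", "/blog", "/blog/post-1", "/old-landing-page"}

print(click_depths(LINKS))
print(orphan_pages(LINKS, ALL_PAGES))
```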

Duplicate Content and Canonicalization

Duplicate content is one of those issues that sounds scarier than it usually is, but it can still cause problems. The fix isn't always removing content—sometimes it's implementing canonical tags properly so Google knows which version to index.

Check for duplicate title tags and meta descriptions too. These might not be direct ranking factors, but they affect click-through rates from the SERPs, which definitely impacts your traffic.
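Most crawlers flag duplicate titles for you, but if you are working from a raw export, grouping URLs by title takes only a few lines. This is a small sketch with made-up pages. It normalizes whitespace and letter case before comparing, which is my own assumption about what should count as a duplicate.

```python
from collections import defaultdict


def duplicate_titles(pages):
    """Group URLs by normalized title and keep only titles used more than once."""
    groups = defaultdict(list)
    for url, title in pages.items():
        normalized = " ".join(title.split()).casefold()
        groups[normalized].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if len(urls) > 1}


# Hypothetical crawl export: URL -> title tag.
PAGES = {
    "/services": "Technical SEO Services | Example Co",
    "/services/audits": "Technical SEO  Services | Example Co",
    "/blog": "Blog | Example Co",
}

print(duplicate_titles(PAGES))
```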

Faceted Navigation (For E-Commerce Sites)

If you're running an e-commerce site, faceted navigation is probably creating thousands of URL variations. Color filters, size filters, price ranges: each combination potentially creates a new URL. This dilutes your crawl budget and creates thin content pages.

The solution usually involves a combination of canonicalization, strategic use of noindex tags, and robots.txt rules. But be careful here—this is where "effort" starts to climb, and you need dev resources who understand the implications.
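For the canonicalization piece, the core idea is to map every filtered URL back to one clean version. Here is a rough sketch of that mapping. The `FACET_PARAMS` names are hypothetical and depend entirely on your platform, and whether a parameter like `page` should survive is a decision to make with your dev team.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical filter parameters; replace with the ones your platform generates.
FACET_PARAMS = {"color", "size", "price", "sort"}


def canonical_url(url):
    """Drop facet parameters and fragments, keeping every other query parameter."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in FACET_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(canonical_url("https://example.com/shoes?color=red&size=10"))
print(canonical_url("https://example.com/shoes?color=red&page=2"))
```

The output of a function like this is what would go into the canonical tag of each filtered page.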

The Nice-To-Haves (When You Have Time)

These fixes improve things, but they're not going to make or break your organic performance. Tackle these after your foundation and architecture are solid.

Schema Markup Implementation

Structured data helps search engines understand your content better and can earn you rich results in the SERPs. With AI models such as ChatGPT and Gemini in the mix, structured data is as important now as it has ever been. Even local SEO is heading toward AI, and implementing schema markup that search engines can understand can better your chances of becoming or staying visible.

But let's be real—it's not going to rescue a site with fundamental crawlability or content issues.

Start with the basics: Organization schema, LocalBusiness (if applicable), Product schema for e-commerce sites. Then expand to Review schema, FAQ schema, and others as you have bandwidth.
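As a starting point, Organization schema is just a small JSON-LD object inside a script tag. The sketch below builds one in Python. The business details are placeholders, and I've only included a handful of common schema.org properties, so check which fields apply to your site.

```python
import json


def organization_jsonld(name, url, logo, same_as):
    """Build a JSON-LD <script> tag describing an Organization."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
        "sameAs": same_as,
    }
    # Escape "</" so a stray closing tag in the data cannot end the script early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder business details for illustration.
print(
    organization_jsonld(
        name="Example Co",
        url="https://example.com",
        logo="https://example.com/logo.png",
        same_as=["https://www.linkedin.com/company/example"],
    )
)
```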

Redirect Chain Cleanup

Redirect chains (page A → page B → page C) slow down page loads and waste crawl budget. They should be fixed, but they're usually not urgent unless you have hundreds of them or they're affecting critical user journeys.
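If you export your redirects as a source-to-target list, you can trace each chain and work out where every old URL should point directly. This is a minimal sketch with a made-up redirect map. It stops on loops and on chains longer than `max_hops`, a cap I picked arbitrarily.

```python
def resolve_chain(redirects, start, max_hops=10):
    """Follow redirects from start and return every URL visited, in order."""
    chain = [start]
    seen = {start}
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen or len(chain) > max_hops:
            break  # redirect loop or suspiciously long chain
        seen.add(target)
    return chain


def flatten(redirects):
    """Point every source directly at its final destination, skipping loops."""
    flat = {}
    for source in redirects:
        final = resolve_chain(redirects, source)[-1]
        if final not in redirects:  # a final URL that still redirects is unresolved
            flat[source] = final
    return flat


# Hypothetical redirect export: source -> target.
REDIRECTS = {"/page-a": "/page-b", "/page-b": "/page-c", "/old": "/new"}

print(resolve_chain(REDIRECTS, "/page-a"))
print(flatten(REDIRECTS))
```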

Hreflang Implementation

If you're targeting multiple countries or languages, hreflang tags tell Google which version to show to which users. They're critical for international sites, and they can matter regionally too. I was born in Miami, and I understand that the majority of people there primarily speak Spanish. This is a case-by-case task, so you need to know whether it's right for your site.
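For a simple English and Spanish setup, the tags can be generated from a language-to-URL mapping. The sketch below uses placeholder URLs and language codes. The same full set of tags should appear on every alternate version, so the pages reference each other.

```python
from html import escape


def hreflang_tags(alternates, default=""):
    """Build <link rel="alternate"> tags for each language version of a page."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in alternates.items()
    ]
    if default:
        # x-default marks the fallback for users who match no listed language.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default)}" />')
    return tags


# Placeholder versions of one page.
ALTERNATES = {
    "en-us": "https://example.com/",
    "es-us": "https://example.com/es/",
}

for tag in hreflang_tags(ALTERNATES, default="https://example.com/"):
    print(tag)
```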

The Biggest Mistake Most People Make

They try to fix everything at once. Don't do that.

Technical SEO is a marathon, not a sprint. If you overwhelm your dev team with 100 tickets, nothing gets done. If you fix low-impact issues first because they're easier, you waste time while critical problems continue tanking your traffic.

Pick your battles. Fix what matters most. Measure the impact. Then move on to the next tier.

The Tools That Actually Help

I'm not here to pitch specific tools, but you need at least a few essentials. I've also put together a resource on the Best SEO Platforms for AI Visibility.

A crawler (Screaming Frog, SEMRush, or Botify for enterprise)

Google Search Console (obviously)

PageSpeed Insights or Lighthouse for Core Web Vitals

A log file analyzer if you're working on a large site

Need help prioritizing technical fixes for your site? I work with businesses to develop clear, actionable technical SEO strategies that focus on what actually moves the needle. Let's talk about your site architecture.